In the era of AI-driven support, chatbots must deliver accurate, helpful, and efficient responses to keep users satisfied and operations running smoothly. A robust quality score calculation lets organizations systematically assess and improve chatbot performance and, with it, the overall SysAid Copilot experience.

By analyzing key factors such as data accuracy, user satisfaction, and issue resolution rates, businesses can identify areas for improvement and make sure the quality score reflects what actually happened in each interaction, with a focus on user issues and feedback.

This process improves chatbot reliability, fosters trust, and reduces the burden on human agents, driving cost savings and enhancing customer experiences.

In this article, you’ll learn which key factors are taken into account when calculating the quality score for an AI chatbot response.

How it works

When providing a user with an answer, the AI chatbot assigns a quality score that starts at a baseline of 50 points and moves up or down from there. The higher the score, the better the answer.

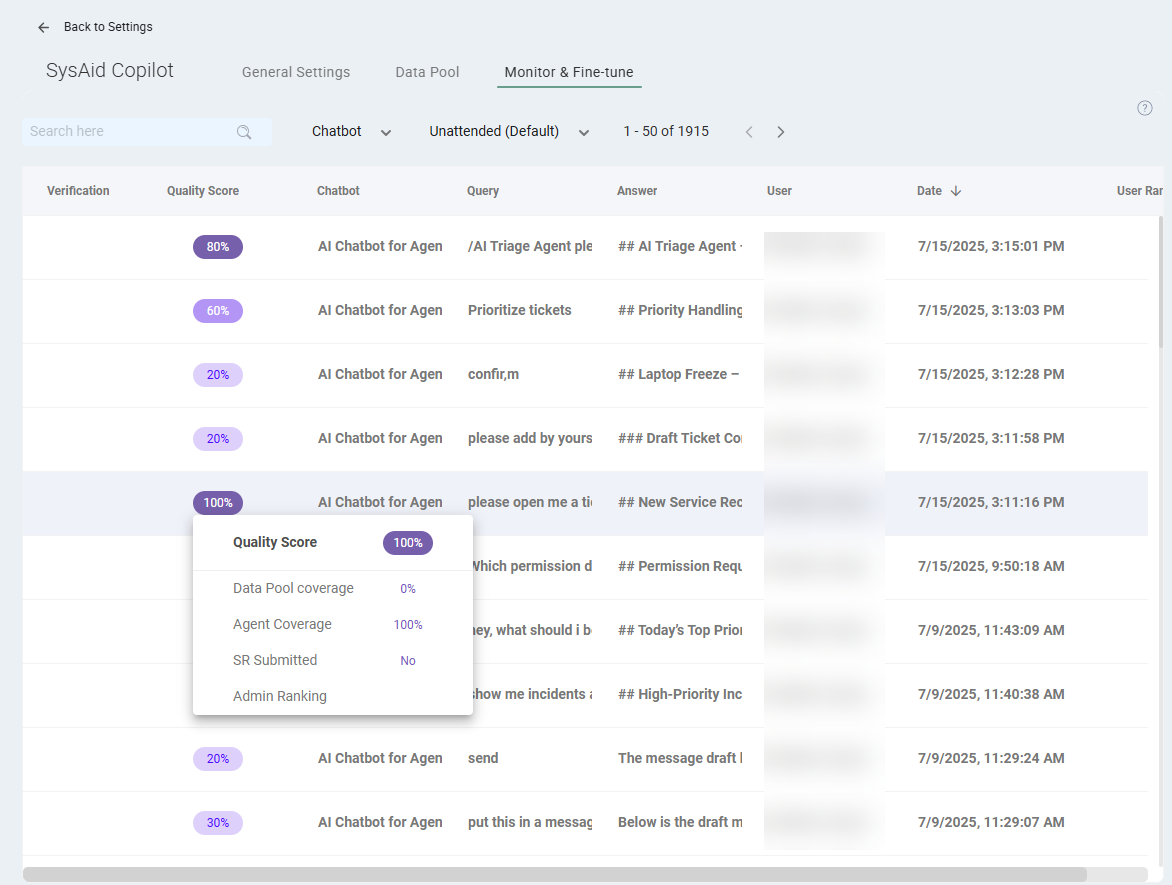

The score changes based on the following key factors:

Data Pool Coverage

Agent Coverage

Admin Ranking/End User Ranking

Service Record Submitted

Self-Servable

Let’s go over what each factor means:

Data Pool Coverage

The calculation considers how much of the formulated answer was taken from the associated data pools.

Points are deducted if less than 30% of the answer is taken from the data pool.

Points are added if 50% or more of the answer is taken from the data pool.
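The two thresholds above can be sketched as a small function. This is only an illustration: the article does not publish the number of points involved, so `COVERAGE_PENALTY` and `COVERAGE_BONUS` are hypothetical values, and treating coverage between 30% and 50% as neutral is an assumption.

```python
# Hypothetical point values; the actual magnitudes are not published.
COVERAGE_PENALTY = -10
COVERAGE_BONUS = 10


def data_pool_adjustment(coverage: float) -> int:
    """Return the score change for a data pool coverage given as 0.0 to 1.0."""
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must be between 0.0 and 1.0")
    if coverage < 0.30:
        # Less than 30% of the answer came from the data pool.
        return COVERAGE_PENALTY
    if coverage >= 0.50:
        # 50% or more of the answer came from the data pool.
        return COVERAGE_BONUS
    # Assumption: coverage from 30% up to 50% leaves the score unchanged.
    return 0
```

For example, under these assumed values an answer with 20% coverage would lose points, one with 40% coverage would be unaffected, and one with 65% coverage would gain points.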

Agent Coverage

The chatbot often runs AI agents and functions while answering a user. Examples include creating or closing a service record, or asking the user for additional information such as the priority of the service record. These actions can only add points, never deduct them: if an AI agent was executed, the overall score of the response increases.

Admin Ranking/End User Ranking

This is the thumbs-up or thumbs-down rating that the user gave the answer:

Points are deducted when the user gives the answer a thumbs-down.

Points are added when the user gives the answer a thumbs-up.

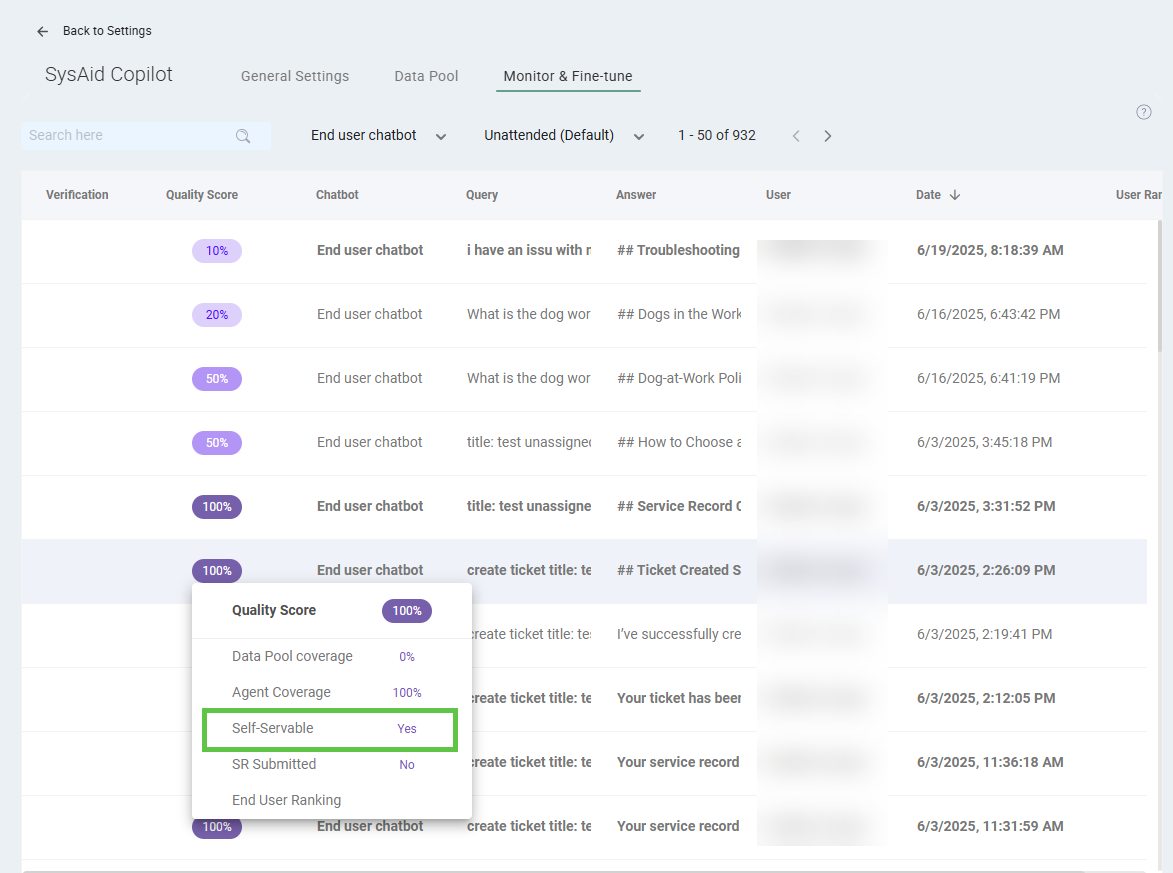

Self-Servable

This measures how self-servable the AI chatbot’s answer is, meaning how likely the reader is to solve their issue using it. The more detailed and focused the answer, the more points the response receives.

When the self-service rate is high and no service record is created, the interaction is designated as self-servable and marked with a “Yes” on the Monitor & Fine-tune page.
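A minimal sketch of that designation rule follows. The article only says the rate must be "high", so the `HIGH_SELF_SERVICE_RATE` cutoff and the function name are hypothetical.

```python
# Hypothetical cutoff; the article does not say what counts as a "high" rate.
HIGH_SELF_SERVICE_RATE = 0.7


def is_self_servable(self_service_rate: float, service_record_created: bool) -> bool:
    """True when the interaction would be marked "Yes" under the rule above."""
    # Both conditions must hold: a high rate and no service record.
    return self_service_rate >= HIGH_SELF_SERVICE_RATE and not service_record_created
```

Under this assumed cutoff, an answer with a high rate still fails the check as soon as a service record is created for the same interaction.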

Service Record Submitted

Points are added if an issue is resolved without agent intervention. This includes resolutions directly from the chatbot response or user actions that close tickets without needing an agent.

Points are deducted if a self-servable query results in creating a service record.

Points are added if a self-servable query doesn’t result in creating a service record.
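The two rules above could be expressed as follows. `SERVICE_RECORD_POINTS` is a hypothetical value, and leaving the score unchanged for queries that are not self-servable is an assumption, since the article only describes the self-servable case.

```python
# Hypothetical point value; the actual magnitude is not published.
SERVICE_RECORD_POINTS = 10


def service_record_adjustment(self_servable_query: bool,
                              service_record_created: bool) -> int:
    """Return the score change for the Service Record Submitted factor."""
    if not self_servable_query:
        # Assumption: queries that need an agent anyway are not adjusted.
        return 0
    if service_record_created:
        # A self-servable query still produced a ticket: deduct points.
        return -SERVICE_RECORD_POINTS
    # The user resolved the issue without a ticket: add points.
    return SERVICE_RECORD_POINTS
```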

Final score calculation

The final score ranges from 0% to 100%. The higher the score, the more accurate the answer.

This scoring system rewards accurate responses, successful self-service, and positive user experiences while penalizing low data pool coverage and the creation of unnecessary tickets.

The quality score balances context understanding, function execution, user satisfaction, self-service effectiveness, and appropriate ticket creation.
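To make the arithmetic concrete, here is a rough sketch of how per-factor adjustments might combine into a final score. Only the baseline of 50 and the 0 to 100 range come from this article. The point values, the function names, the use of simple addition, and the clamping step are all assumptions for illustration.

```python
from typing import Optional

BASELINE_SCORE = 50  # Stated in the article.

# Hypothetical point values; the actual magnitudes are not published.
AGENT_BONUS = 10
RANKING_POINTS = 15


def agent_adjustment(agent_executed: bool) -> int:
    """Agent coverage can only add points, never deduct them."""
    return AGENT_BONUS if agent_executed else 0


def ranking_adjustment(thumbs_up: Optional[bool]) -> int:
    """thumbs_up is True, False, or None when the user left no ranking."""
    if thumbs_up is None:
        return 0  # Assumption: no ranking leaves the score unchanged.
    return RANKING_POINTS if thumbs_up else -RANKING_POINTS


def final_score(*adjustments: int) -> int:
    """Add the adjustments to the baseline and keep the result within 0..100."""
    # Assumption: factors combine by simple addition.
    total = BASELINE_SCORE + sum(adjustments)
    return max(0, min(100, total))


# An agent ran and the user gave a thumbs-up: 50 + 10 + 15.
print(final_score(agent_adjustment(True), ranking_adjustment(True)))  # 75
# No agent ran and the user gave a thumbs-down: 50 + 0 - 15.
print(final_score(agent_adjustment(False), ranking_adjustment(False)))  # 35
```

Clamping keeps an interaction with many bonuses or many penalties inside the documented 0% to 100% range.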

Chat pleasantries

Chatbot answers such as “Hi,” “How are you?” and “How can I help you?” are classified as pleasantries and automatically marked as “Irrelevant.” They do not receive a quality score calculation as they are not an integral part of the exchange.
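As a simple illustration, pleasantries could be filtered out before any scoring takes place. The article does not describe how the classification is actually done, so the exact-match phrase list and the `classify_answer` function below are hypothetical.

```python
from typing import Optional

# Hypothetical phrase list built from the examples in this article.
PLEASANTRIES = {"hi", "how are you?", "how can i help you?"}


def classify_answer(text: str) -> Optional[str]:
    """Return "Irrelevant" for a pleasantry, or None if the answer should be scored."""
    # Ignore surrounding whitespace and letter case when matching.
    normalized = text.strip().lower()
    if normalized in PLEASANTRIES:
        return "Irrelevant"
    return None
```

An answer that returns `None` here would continue to the quality score calculation, while one marked “Irrelevant” would be skipped.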